Passwords, Pirates, and Privacy Pitfalls

From shared passwords to stolen accounts: The real threats lurking in subscriptions

The Spark: Where It All Began

I was 23, just a couple of years into my full-time job, when I first stumbled upon something that would change the trajectory of my career. While most of my peers were focused on postgraduate exams—GATE, GRE, GMAT—I noticed a curious pattern. Many of my friends pooled their money together to buy one subscription for an online course and then shared the login credentials. An expensive subscription became affordable when split between three or four people.

At first, I thought of it as a clever way for students to save money. But working at an e-learning company, I saw the other side. No matter how much money we poured into marketing, conversion rates from free trials to paid subscriptions barely improved. At the same time, the infrastructure cost for each active paid account kept going up. Could account sharing be the reason?

I started to observe more closely. It wasn’t just students; account sharing was happening in corporate environments, and on Reddit and OLX people openly sold subscriptions. I reached out to founders of subscription-based businesses and confirmed my suspicions: account sharing was a massive problem eating into their revenue.

That’s when I knew I had stumbled upon something significant. The idea for Authmetrik was born.

Cracking the Code: Finding the Perfect Solution to Account Sharing

As I spoke to more subscription-based companies, it became clear that everyone was feeling the same frustration, and the solutions they had in place weren’t solving the root problem. They were just hacks, temporary fixes that created more friction for the end users without actually preventing account sharing.

Most companies tried one or a combination of these four approaches:

No concurrent logins: The idea was simple: don’t allow multiple logins at the same time. But this didn’t work because it unfairly penalized legitimate users who wanted to access their accounts on different devices at different times. Moreover, sharers would simply coordinate with each other and use the account at different times.

Restrict access to one IP address: This solution assumed that users would only log in from one IP. In practice, people were locked out of their accounts every time they changed networks or used a mobile device.

Restrict access to one device (browser fingerprinting): This solution tried to limit access to a specific device, but it was flawed. People use multiple devices: laptops, tablets, phones. Forcing them to stick to just one disrupted the experience. Also, a browser fingerprint can easily change when the system configuration or installed software changes, locking a legitimate user out of a legitimate device.

Two-factor authentication (2FA): While 2FA added a layer of security, it didn’t address account sharing. People would simply share the authentication code along with the password, making this another ineffective solution.

Each of these approaches had one thing in common: they focused on restricting access in a way that frustrated genuine users. And none of them could truly stop unauthorized account sharing.

The Real Challenge: Balancing Security with User Experience

The problem was clear: companies were losing revenue from account sharing. But here’s the tricky part—any solution that prevents sharing would also work against the end user’s convenience. If you add too much friction, people will stop using the service altogether. The key challenge was to stop unauthorized access without disrupting the user experience. It had to be seamless, invisible even.

I realized I needed to go back to the fundamentals of how subscription accounts work, how users access them, and what the first point of account sharing is. That’s when I began thinking more deeply about what defines a user’s identity in the digital world.

Three Core Principles for a Solution

I broke down the solution into three fundamental principles:

What a user has (Device or IP Address): The most obvious method was tracking what device or IP address a user is logging in from. But as we’ve seen, this doesn’t work. Devices change, IP addresses change, and people are constantly switching between networks. This approach was too rigid and couldn’t adapt to modern user behavior.

What a user does (Behavior Identification): Then I thought about something more sophisticated—what if we could recognize users based on their behavior? How they interact with the platform—their content consumption, their typical usage patterns. This could be a powerful solution. But it came with its own set of challenges. We’d need massive amounts of data to train a model to recognize these behaviors, and even then, it might not scale easily across industries. For example, the way someone uses a streaming service could be very different from how they interact with a SaaS platform.

What a user is (Biometric Identification): Finally, I considered biometrics—the most personal and accurate form of identification. Fingerprints, facial recognition, and even voiceprints could solve the problem with near-perfect accuracy. But these methods also came with major downsides. They’re intrusive and add friction. Asking users to authenticate with their fingerprint or face every time they log in would significantly disrupt their experience.

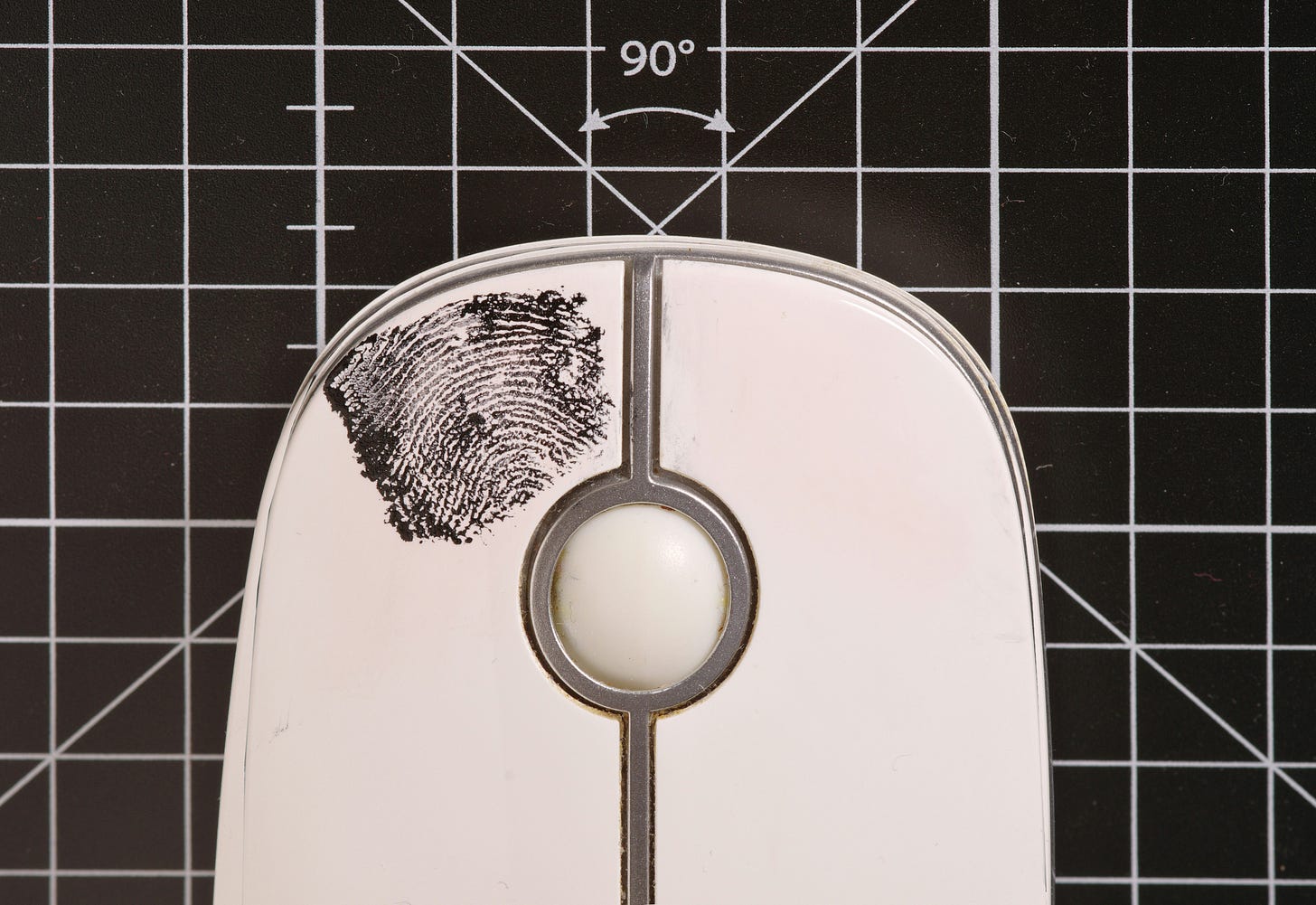

That’s when I stumbled upon a more subtle and less intrusive biometric: keystroke dynamics and mouse movement dynamics. These are behaviors that are unique to every individual but don’t require any conscious effort from the user. The way a person types on their keyboard, or how they move their mouse, are biometric traits. The beauty of this approach was that it didn’t add any friction for the user—it worked silently in the background, observing patterns and ensuring the person using the account was the one who was supposed to.

Keystroke Dynamics from World War II to Modern Times

Keystroke dynamics, surprisingly, has roots as far back as World War II. During the war, military intelligence used the unique rhythm and typing patterns of telegraph operators to identify them, even if their messages were encrypted. Each operator had a distinct way of tapping out Morse code, and this “fingerprint” helped confirm the identity of the sender. What began as a method to ensure secure communications in wartime has evolved into a modern-day biometric tool, offering a seamless way to verify identity—without the need for conscious input from the user. This is the same subtle yet powerful principle I chose to build Authmetrik around.

With this realization, the solution became clear. Authmetrik would use keystroke and mouse dynamics to silently detect whether the person accessing the account was the genuine subscriber or someone else. It would prevent unauthorized access in real-time without changing the user experience. The solution was invisible, seamless, and effective—a perfect balance between security and convenience.

Diving Deeper: The Quest to make Keystroke Dynamics work in the real world

As I delved deeper into keystroke dynamics, I found myself poring over research papers, around 70 of them, spanning years of work. One thing became immediately clear: while the concept of keystroke dynamics was promising, the research was largely conducted in controlled environments. Participants were asked to type the same string 50 times or more to allow the system to learn their typing patterns before it could reliably validate their identity. This approach might work in a lab, but in the real world, it would be impossible. Asking users to type out a string fifty times just to create a profile? No way. That would break the core principle of keeping the user experience frictionless.

I needed an alternative. The constraint remained the same—any solution had to be invisible to the user. Users typically enter their password twice during registration, which sparked an idea: Could we capture a keystroke signature using just those two samples? If we could, it would create the perfect balance between minimal user input and maximum accuracy. But even then, passwords alone might not be enough. Passwords are often short and repetitive, limiting the richness of the keystroke pattern we could capture.

That’s when my curiosity about human psychology came into play. I had always been fascinated by observing behaviors and understanding how people interact with technology. This curiosity led me to read more about how subtle actions, like typing patterns, could reveal deeper behavioral traits. I knew that human behavior is most authentic in routine tasks—the kind we do without thinking. And what's one thing people type consistently, with natural ease? Their email address. An email address is long enough to reveal unique typing rhythms, yet familiar enough that users wouldn’t need to alter their behavior. The subtle variations in typing speed, hesitation between characters, and the pressure applied to keys—these tiny, subconscious actions could serve as an almost perfect digital fingerprint.

So, I started studying the art and science of typing patterns more closely. Combining the technical side of keystroke dynamics with my understanding of human behavior, I realized that typing an email address during registration, not a password, would provide a richer, more reliable dataset. The email address wasn’t just a piece of login information—it was a behavioral signature.

By focusing on something users typed naturally and frequently, we could create a solution that required no change in behavior and was entirely invisible to the end user. And that’s how we began to rethink keystroke dynamics—not as a forced task, but as a subtle observation of routine behavior, captured seamlessly.

Building the AI Foundation: From basic algorithms to Machine Learning

When I set out to build Authmetrik, jumping straight into advanced AI/ML techniques wasn’t my immediate path. Instead, I focused on starting simple—understanding the fundamentals before bringing in the heavy machinery of AI. The goal was to build a solution that didn’t just detect unauthorized account sharing but did so invisibly and seamlessly, without disrupting the end user.

The First Step: Starting with Basic Algorithms

Keystroke dynamics had been studied for years, and the initial steps were clear. I began experimenting with traditional algorithms that measured typing speed, time between key presses (latency), and overall rhythm. These algorithms relied on comparing a user’s typing pattern to a baseline, flagging deviations as potential unauthorized access.
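
To make that concrete, here is a minimal Python sketch of a baseline comparison of this kind. It is an illustration only, not Authmetrik's actual code: the `KeyEvent` structure, the choice of mean absolute difference as the distance, and the 40 ms threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    key: str
    down_ms: float  # timestamp when the key was pressed
    up_ms: float    # timestamp when the key was released


def timing_features(events: list[KeyEvent]) -> list[float]:
    """Return dwell time per key, followed by flight time between keys."""
    dwell = [e.up_ms - e.down_ms for e in events]
    flight = [nxt.down_ms - cur.up_ms for cur, nxt in zip(events, events[1:])]
    return dwell + flight


def distance_from_baseline(sample: list[float], baseline: list[float]) -> float:
    """Mean absolute difference (ms) between a sample and the stored baseline."""
    if len(sample) != len(baseline):
        raise ValueError("sample and baseline must cover the same text")
    return mean(abs(s - b) for s, b in zip(sample, baseline))


def is_suspicious(sample: list[float], baseline: list[float],
                  threshold_ms: float = 40.0) -> bool:
    # threshold_ms is an illustrative value, not a tuned one
    return distance_from_baseline(sample, baseline) > threshold_ms
```

A fixed threshold like this is exactly the kind of rigid rule that struggles once typing varies with mood, device, or environment.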

While these methods worked in controlled settings, the real world quickly threw them off balance. Users’ typing patterns varied depending on their mood, device, or environment. A slight variation in typing speed could flag a legitimate user as suspicious. It became clear that relying on rigid patterns was not only frustrating for users but also far too unreliable for any real-world application.

I needed something more flexible—something that could learn from a user’s behavior over time.

The Evolution: Moving Toward Adaptive Learning

1. Adaptive Keystroke Dynamics: One key realization led me to adaptive keystroke dynamics: the more data we had, the better the system could get at recognizing a user’s unique typing signature. However, unlike research environments where subjects typed the same string 50 times, real-world users wouldn’t have the patience for that.

This adaptive approach allowed the model to continuously improve its understanding of a user’s typing behavior over time. Each time a user entered their username or email address to log in, the system learned more. This meant we could build a solution that required minimal input while still improving accuracy with each interaction.
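
One simple way to picture this kind of incremental learning is a template that keeps a running mean and spread per timing feature and folds in each new login. The sketch below is an assumption about how such an update could look, not the model Authmetrik shipped; the class name `AdaptiveTemplate`, the use of Welford's running-variance method, and the 5 ms floor are all illustrative.

```python
import math


class AdaptiveTemplate:
    """Running per-feature mean and spread, updated one login at a time."""

    def __init__(self, n_features: int) -> None:
        self.count = 0
        self.mean = [0.0] * n_features
        self._m2 = [0.0] * n_features  # running sum of squared deviations

    def update(self, sample: list[float]) -> None:
        """Fold one accepted login into the template (Welford's method)."""
        self.count += 1
        for i, x in enumerate(sample):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.count
            self._m2[i] += delta * (x - self.mean[i])

    def std(self, i: int) -> float:
        """Sample standard deviation of feature i (0.0 until two samples exist)."""
        if self.count < 2:
            return 0.0
        return math.sqrt(self._m2[i] / (self.count - 1))

    def score(self, sample: list[float], floor_ms: float = 5.0) -> float:
        """Mean absolute z-score; lower means closer to the template."""
        total = 0.0
        for i, x in enumerate(sample):
            spread = max(self.std(i), floor_ms)  # floor avoids dividing by ~0
            total += abs(x - self.mean[i]) / spread
        return total / len(sample)
```

In a setup like this, `update` would only be called for logins that were accepted as genuine, so that a sharer's typing does not drift into the template.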

2. Data Acquisition & Preparation: One of the biggest challenges wasn’t just designing algorithms—it was finding the right data. Keystroke dynamics data is highly variable, and public datasets were scarce, especially for real-world conditions. This meant I had to get creative and think carefully about how data would flow at different stages of a user’s lifecycle—from registration to multiple login events.

Sourcing the Data: Real-World Meets Synthetic

To begin, I started with open-source synthetic data, the same type that had been used in prior research studies. However, most of this data was collected in controlled settings, where users typed the same string 50 times, which wasn’t feasible for a live system. No user would type something 50 times to build a profile. So, I adapted the data to simulate a real-world environment, where users typically input their email or password once during registration and at each login thereafter. This required transforming the data into a stream that mirrored the sporadic inputs of a user, providing the system with a realistic flow of data over time.
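
As a rough illustration of that transformation, the sketch below splits one subject's repeated typings into a small registration set and a chronological stream of later logins. The function name, the list-of-feature-vectors layout, and the default of two registration samples (taken from the "typed twice at registration" case described elsewhere in this post) are assumptions for the example.

```python
from typing import Iterator


def to_login_stream(
    repetitions: list[list[float]],
    registration_samples: int = 2,
) -> tuple[list[list[float]], Iterator[tuple[int, list[float]]]]:
    """Split lab-style repetitions into registration samples and a login stream.

    `repetitions` holds one timing-feature vector per repetition, in the
    order the subject typed them.
    """
    if len(repetitions) < registration_samples:
        raise ValueError("not enough repetitions to simulate registration")
    registration = repetitions[:registration_samples]
    # Remaining repetitions are replayed one at a time, numbered from login 1.
    logins = enumerate(repetitions[registration_samples:], start=1)
    return registration, logins
```

Replaying the stream one sample at a time lets a model be evaluated the way it would actually be used: with only the data that existed before each login.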

Adapting Models for Different Stages of User Interaction

The challenge with keystroke dynamics is that the accuracy of identifying a user improves as more data is collected. But when a user first registers, the data is sparse—just one or two samples—requiring different models at each stage of interaction.

Registration Stage: During registration, we usually had only two samples—the user typing their email address or password twice. At this stage, advanced machine learning models weren’t practical because there wasn’t enough data to train them. Instead, we used basic algorithms and lightweight models that captured a rough typing signature, even with minimal input.

Early Login Stage: After a few login events, when we had collected five to ten samples from the user, we moved toward supervised machine learning. With each new login attempt, the system refined its understanding of the user’s typing behavior. This adaptive approach allowed the model to improve accuracy incrementally with each interaction, remaining flexible enough to account for natural variations in typing patterns.

Established User Stage: Once we had 20 or more samples—after several login events—the system had enough data to move beyond basic models. This is when we introduced unsupervised machine learning. With a rich dataset of the user’s typing patterns, we could train the model on more complex features. These unsupervised models significantly boosted accuracy by identifying deeper, more refined patterns in the user’s behavior.
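
The three stages above amount to routing each user by how many samples exist for them. A minimal sketch of that routing, under stated assumptions: the cut-offs of 5 and 20 come from the sample counts mentioned above, while treating the unspecified range between the early and established stages as "early login" is my simplification for the example.

```python
from enum import Enum


class Stage(Enum):
    REGISTRATION = "registration"
    EARLY_LOGIN = "early_login"
    ESTABLISHED = "established"


def stage_for(sample_count: int, early_min: int = 5,
              established_min: int = 20) -> Stage:
    """Pick the modelling stage from the number of samples collected so far."""
    if sample_count < 0:
        raise ValueError("sample_count cannot be negative")
    if sample_count >= established_min:
        return Stage.ESTABLISHED
    if sample_count >= early_min:
        return Stage.EARLY_LOGIN
    return Stage.REGISTRATION
```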

Ensemble Methods: Improving accuracy across different stages

Given the diversity of typing behaviors across different stages, it became clear that a one-size-fits-all model wouldn’t suffice. Each stage presented unique challenges, and the system needed to handle both new users with limited data and established users with richer interaction histories.

To address this, we introduced ensemble methods, which helped combine various models that could accurately identify users across different stages of interaction. Ensemble models allowed us to blend specialized models designed for each stage—registration, early logins, and established users. By combining their outputs, or “voting” on whether a user was legitimate, these models worked in concert to significantly improve overall accuracy and reduce false positives and negatives.
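
A weighted soft vote is one common way to combine model outputs like this. The sketch below is a generic illustration rather than Authmetrik's ensemble: the model names, the weights, and the 0.5 decision threshold are hypothetical.

```python
def combined_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of each model's probability that the user is genuine."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def ensemble_vote(scores: dict[str, float], weights: dict[str, float],
                  threshold: float = 0.5) -> bool:
    """Return True when the weighted vote says the user is legitimate."""
    return combined_score(scores, weights) >= threshold


# Hypothetical outputs from the three stage-specific models
scores = {"registration": 0.4, "early_login": 0.7, "established": 0.9}
weights = {"registration": 1.0, "early_login": 2.0, "established": 3.0}
print(ensemble_vote(scores, weights))
```

Giving more weight to the models trained on more data is one plausible design choice; the weights could equally be learned from validation results.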

The 84% Accuracy Challenge

Despite the iterative improvements, our models plateaued at 84% accuracy. While this is a commendable result, it wasn’t sufficient for industry applications. An 84% accuracy rate meant that a large number of legitimate users could still be falsely flagged, leading to a frustrating user experience. On the other hand, failing to detect unauthorized users posed a significant risk to subscription businesses.

Industry standards typically required accuracy rates closer to 95% or higher. This limitation showed that while keystroke dynamics provided a strong foundation, they alone weren’t enough to meet the required accuracy levels for large-scale, real-world applications.
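
A quick worked example shows why a single accuracy number hides both kinds of error. The counts below are made up purely for illustration (they are not Authmetrik's results); they were chosen so that overall accuracy comes out at 84%.

```python
def rates(accepted_genuine: int, rejected_genuine: int,
          accepted_sharer: int, rejected_sharer: int) -> dict[str, float]:
    """Accuracy plus the two error rates that matter for this problem."""
    total = accepted_genuine + rejected_genuine + accepted_sharer + rejected_sharer
    return {
        "accuracy": (accepted_genuine + rejected_sharer) / total,
        # share of legitimate users who were wrongly flagged
        "false_rejection_rate": rejected_genuine / (accepted_genuine + rejected_genuine),
        # share of sharers who were wrongly let through
        "false_acceptance_rate": accepted_sharer / (accepted_sharer + rejected_sharer),
    }


# Hypothetical: 1,000 login attempts, 800 by genuine users and 200 by sharers
print(rates(accepted_genuine=680, rejected_genuine=120,
            accepted_sharer=40, rejected_sharer=160))
```

With these invented counts, 84% accuracy still means 120 of 800 genuine logins are flagged and 40 of 200 sharer logins get through.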

Understanding Human Behavior: The key to unlocking accuracy

My curiosity about human behavior had always been a driving force behind the decisions I made in building Authmetrik. I had already spent countless hours studying typing patterns and how subtle differences in keystrokes could tell us so much about a person. But as I dove deeper into the challenge of preventing unauthorized account sharing, I realized that understanding how people use these accounts—their behaviors beyond just typing—could reveal even more.

It started with a simple observation. If someone was going to share an account, they would probably exhibit certain behaviors before and during the sharing. Take free trials, for example. Users who share accounts often exhaust the free trial first, testing the service before they hand off their credentials to others. This pattern was subtle but common in sharers, especially when compared to legitimate users who often convert earlier or extend their trial with payment.

Once I started paying closer attention to these kinds of behaviors, I noticed other patterns that suggested account sharing:

Multiple Devices in Different Locations: A genuine user might access their account from a few trusted devices—laptop, phone, maybe a tablet. But sharers often have multiple, geographically dispersed devices logging in, and the access times overlap in ways that legitimate users would rarely exhibit.

Inconsistent Usage Patterns: Another flag came from usage behavior itself. Legitimate users tend to have predictable usage times, often related to their schedules—early mornings or evenings after work. Sharers, on the other hand, may log in at random hours because multiple people are accessing the account.
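
One way to turn "predictable versus random login hours" into a number is the entropy of the hour-of-day distribution. This is a sketch of a possible feature, not necessarily one of the attributes Authmetrik used; the function name is hypothetical.

```python
import math
from collections import Counter
from datetime import datetime


def login_hour_entropy(logins: list[datetime]) -> float:
    """Shannon entropy (in bits) of the hours of day at which logins occur.

    Low values mean logins cluster in a few hours; high values mean they
    are spread across the day.
    """
    if not logins:
        return 0.0
    counts = Counter(ts.hour for ts in logins)
    total = len(logins)
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())
```

An account that always logs in around the same hour scores close to zero, while one used at scattered hours scores higher, which fits the sharing pattern described above.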

These behavioral clues triggered something deeper in me. My curiosity about human psychology pushed me to think: What other behaviors could reveal unauthorized access? It wasn’t just about catching a pattern of typing; it was about understanding the intent behind how users interacted with the system. This led me to a deeper analysis of user behavior, where I started mapping out potential account-sharing behaviors.

Discovery: The 30+ behavioral attributes of Account Sharing

I began rigorously studying how different types of users—both legitimate and sharers—engaged with subscription-based services. By observing how they interacted with the platform, I gradually uncovered 30+ distinct behavioral attributes that were commonly linked to account sharing.

Some of these included:

Time of First Login: Sharers often wait until after the free trial ends to start sharing the account, whereas legitimate users frequently log in earlier and more consistently.

Frequency of Logins: Legitimate users tend to log in at regular intervals, reflecting their daily or weekly routines. In contrast, shared accounts often have erratic login patterns, as different people access the account at random times.

Device Handovers: In shared accounts, you can observe a clear handover between devices: a quick logout from one device and a login from another within a short time window. This is especially noticeable across different IP addresses, suggesting different users in different locations.

Usage Duration: Legitimate users tend to have predictable session lengths that match their engagement with the service. Sharers, however, might exhibit short bursts of activity on one device while a completely different usage pattern emerges on another device soon after.
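
To show how an attribute like a device handover could be computed from session logs, here is a small sketch. The `SessionEvent` fields, the five-minute window, and the rule that both the device and the IP address must differ are assumptions for the example, not a description of the production logic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SessionEvent:
    kind: str        # "login" or "logout"
    device_id: str
    ip: str
    at: datetime


def count_handovers(events: list[SessionEvent],
                    window: timedelta = timedelta(minutes=5)) -> int:
    """Count logouts followed, within `window`, by a login from a
    different device and a different IP address."""
    handovers = 0
    last_logout = None
    for event in sorted(events, key=lambda e: e.at):
        if event.kind == "logout":
            last_logout = event
        elif event.kind == "login" and last_logout is not None:
            if (event.device_id != last_logout.device_id
                    and event.ip != last_logout.ip
                    and event.at - last_logout.at <= window):
                handovers += 1
            last_logout = None  # each logout is paired with at most one login
    return handovers
```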

As I compiled these attributes, I realized that combining these behavioral indicators with keystroke dynamics could provide a holistic view of a user’s behavior. While typing patterns alone could reveal much about the person typing, these broader behavioral insights added a new layer of understanding.

Extending the Algorithm: Integrating Behavioral Attributes

Once I identified these 30+ behavioral attributes, the next step was to extend Authmetrik’s algorithm to include them alongside the existing keystroke dynamics. The challenge was in developing a model that could weigh the importance of each behavioral attribute against typing patterns, creating a composite score that could better detect unauthorized account sharing.

The algorithm now took into account not just how users typed, but how they used the platform:

Keystroke Dynamics: Still at the core, capturing typing patterns.

Behavioral Attributes: Now layered on top, analyzing broader behaviors such as login timing, device usage, and session length.

The more attributes we added, the better the system became at flagging unauthorized use. The combination of both micro (keystroke dynamics) and macro (behavioral) data created a powerful detection system.
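
A composite score of this kind can be sketched as a weighted blend of a keystroke risk and a set of behavioral risks. The version below is a simplified illustration: the attribute names, the equal weights, and the 50/50 split between keystroke and behavioral signals are assumptions, and every input is assumed to be already scaled to the range 0 to 1.

```python
def composite_risk(keystroke_risk: float,
                   behavior_risks: dict[str, float],
                   behavior_weights: dict[str, float],
                   keystroke_weight: float = 0.5) -> float:
    """Blend keystroke and behavioral risk into one score in [0, 1].

    Higher values mean the session looks less like the genuine subscriber.
    """
    total_weight = sum(behavior_weights[name] for name in behavior_risks)
    if total_weight > 0:
        behavior = sum(behavior_risks[name] * behavior_weights[name]
                       for name in behavior_risks) / total_weight
    else:
        behavior = 0.0  # no behavioral data yet: rely on keystrokes only
    return keystroke_weight * keystroke_risk + (1 - keystroke_weight) * behavior


# Hypothetical, already-normalized attribute values
risk = composite_risk(
    keystroke_risk=0.2,
    behavior_risks={"login_hour_entropy": 0.8, "device_handovers": 0.4},
    behavior_weights={"login_hour_entropy": 1.0, "device_handovers": 1.0},
)
print(risk)
```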

The Breakthrough: Reaching 93% Accuracy

Once these new behavioral attributes were integrated into the algorithm, the results were staggering. The system’s accuracy jumped from 84% to 93%, a significant leap that brought us closer to industry-standard levels. The key was that by understanding how account sharers behaved—not just how they typed—we could identify them much more accurately.

The Evolution of 2FA: From static codes to adaptive, Behavior-Driven Dual Defense

Even with the remarkable improvement to 93% accuracy, the reality was that it still wasn’t perfect. While this accuracy level was a significant leap, it meant that some users could still encounter false positives—legitimate users being flagged as unauthorized. This would inevitably lead to a frustrating user experience, and preventing that was essential.

To address this, we needed an extra layer of protection—one that could safeguard both the business and the end user without creating additional friction. That’s when I turned to the idea of implementing Two-Factor Authentication (2FA). But this wouldn’t be your typical, easily bypassed 2FA where users simply input a code sent to their phone. We needed something smarter.

The Birth of Behavior-Driven Dual Defense

To take Authmetrik to the next level, we developed what I called Behavior-Driven Dual Defense. This wasn’t just a traditional second-factor method; it was a context-aware, behavior-driven form of 2FA. The system didn't just rely on a code—this authentication method analyzed user behavior in real time and factored in the overall risk profile of the account before granting access.

The key insight here was that sharers can easily pass traditional two-factor authentication by simply sharing the code, much like they share login credentials. But our Dual Defense was designed to be smarter than that. Instead of just relying on a simple, static code, Dual Defense dynamically adjusted based on the account's behavior and risk level, using additional behavioral attributes to strengthen the authentication process.

How Dynamic Behavioral Authentication Worked

Here’s how Dual Defense built on top of the behavioral insights already in place, adding a new layer of sophistication:

Behavioral Risk Assessment: Each account already had a risk score based on the keystroke signature and 30+ behavioral attributes we tracked. If a user's behavior began deviating from their typical patterns, this risk score would rise.

Contextual Two-Factor Authentication: When an account’s risk level crossed a certain threshold, instead of immediately blocking access, Dual Defense initiated a second authentication step. But instead of just sending a code, this step evaluated the context in which the request was being made. Was the user trying to log in from a new device or an unfamiliar location? Was this happening at a time that didn’t align with their typical usage?

Dynamic Response Based on Behavior: Depending on the account’s behavioral risk, Dual Defense would either grant access, ask for additional verification, or deny access altogether. For example, if the system detected suspicious behavior (such as multiple logins from different devices in short succession), it might ask for verification through a different channel. But for low-risk situations, the user might not even notice the second factor being triggered—it would happen seamlessly in the background.

Learning Over Time: Like the core algorithm itself, Dual Defense was adaptive. It learned from the user's behavior over time. If a legitimate user was flagged once due to unusual behavior but successfully passed Dual Defense, the system would remember this and adjust the risk profile accordingly, reducing the chance of future false positives.
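
The flow above can be summarized as a risk-based decision plus a feedback step. The sketch below is a simplified illustration of that idea, not the actual Dual Defense implementation: the thresholds (0.5 and 0.9) and the size of the adjustment after a challenge (0.2) are hypothetical values.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"   # ask for additional verification
    DENY = "deny"


def decide(risk: float, step_up_at: float = 0.5, deny_at: float = 0.9) -> Decision:
    """Map an account's risk score (0 to 1) to an access decision."""
    if risk >= deny_at:
        return Decision.DENY
    if risk >= step_up_at:
        return Decision.STEP_UP
    return Decision.ALLOW


def adjust_after_challenge(risk: float, passed: bool,
                           relief: float = 0.2, penalty: float = 0.2) -> float:
    """Lower the risk after a passed challenge, raise it after a failed one,
    keeping the result within [0, 1]."""
    updated = risk - relief if passed else risk + penalty
    return min(1.0, max(0.0, updated))
```

For example, a legitimate user who is challenged at a risk of 0.6 and passes would drop below the step-up threshold, so the same behavior is less likely to trigger a challenge next time.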

Reducing False Positives and Improving User Experience

The beauty of Dynamic Behavioral Authentication was that it didn’t just add another layer of security—it also improved the user experience. Traditional two-factor methods can be annoying, especially when they’re triggered unnecessarily. But by tying authentication to behavioral insights, Dual Defense ensured that only truly suspicious activities were flagged, reducing friction for the vast majority of legitimate users.

At the same time, the system became more resilient against sharers. Simply sharing a code wasn’t enough anymore; unauthorized users would also need to mimic the original account holder’s behavior—a much harder task.

With Dual Defense in place, Authmetrik took a significant step toward creating a seamless, highly secure system that balanced security with user convenience. While 93% accuracy was impressive, Dual Defense filled in the remaining gap, reducing false positives and improving security even further.

Conclusion: The Real Problem Behind Account Sharing

Authmetrik’s journey taught me that the problem wasn’t just about preventing unauthorized access—it was about understanding why people shared accounts. The real opportunity lay in converting these sharers into paying customers. With 93% accuracy and Dynamic Behavioral Authentication, we had developed an effective solution. But the next step was to turn account sharers into subscribers.

In the next post, I’ll dive into how the analytics we built not only detected sharers but also helped businesses convert them into paying customers. I’ll also share the story of how I worked with a SaaS company to boost their revenue by 60% by converting the top 30% of their sharers into subscribers.

Stay tuned.